
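VLC is an open-source media player and streaming tool, and nVLC is a C# wrapper for VLC written by an overseas expert. This article covers nVLC usage, nVLC configuration, and an analysis of the nVLC source code and architecture. This column mainly contains translations of articles by overseas experts and of discussion-group threads, along with my own notes and understanding. You are welcome to contact 锐英源 (Ruiyingyuan) about cooperation on media player and streaming projects. This page is mainly a translation of the author's main document; for other details, see the links under the technical categories.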

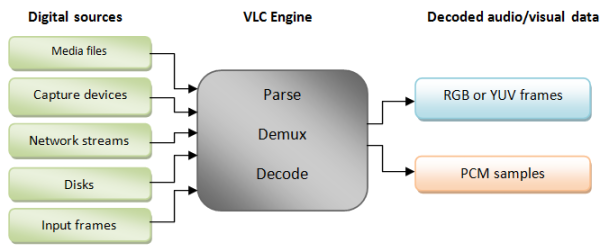

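VLC's built-in codecs mean that no additional plugins need to be installed, which is a great strength. The libvlc.dll module is the interface to the VLC engine and provides rich rendering, streaming, and transcoding functionality. libvlc is a native DLL that exposes hundreds of C functions. This article provides a .NET interface to libvlc so that VLC's main features can be used from managed code.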

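VLC 1.1.x introduced several major improvements and changes; the most attractive are GPU decoding and a simplified libvlc interface without exception handling. Support for the WebM video format was also added.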

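To use libvlc from a managed application, it has to be wrapped by some kind of interop layer. There are three ways to accomplish this: C++/CLI, COM Interop, and P/Invoke.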

Since libvlc is a native library that exports pure C functions, P/Invoke is chosen here.

If you are planning to deepen your knowledge of P/Invoke, libvlc is a great place to start. It has a large number of structures, unions, and callback functions, and some methods require custom marshalling to handle double pointers and string conversions.

To better understand the libvlc interface, we have to download the VLC source code. Please follow this link. After extracting the archive, go to the `<YOUR PATH>\vlc-1.1.4\include\vlc` folder:

These are the header files for libvlc. In case you want to use them directly in a native (C/C++) application, there is an excellent article explaining that.

The entry point of the libvlc API is the libvlc_new function, declared in the libvlc.h header file:

```c
VLC_PUBLIC_API libvlc_instance_t * libvlc_new( int argc , const char *const *argv );
```

argc is the number of strings, and argv is a double pointer to that set of strings, which control the behavior of the VLC engine, such as disabling the screensaver, not using any of the built-in UIs, and so on.

The managed method declaration uses custom marshalling attributes to tell the .NET runtime how to pass an array of System.String objects in the expected native format:

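```csharp
// libvlc exports cdecl functions; on Windows x86 the DllImport default is StdCall.
[DllImport("libvlc", CallingConvention = CallingConvention.Cdecl)]
public static extern IntPtr libvlc_new(int argc,
    [MarshalAs(UnmanagedType.LPArray, ArraySubType = UnmanagedType.LPStr)] string[] argv);
```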

Note that the return type of the method is an IntPtr, which holds the native pointer to the libvlc_instance_t structure.
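Because libvlc_new returns NULL when the engine cannot be initialized, it is worth checking the IntPtr before using it and pairing every successful call with libvlc_release. The following is a minimal sketch under that assumption; libvlc_release is a real libvlc function, but the LibVlcSketch class and its CreateInstance helper are hypothetical and written for illustration, not taken from nVLC.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical P/Invoke declarations; nVLC's own LibVlcMethods class may differ.
public static class LibVlcSketch
{
    // libvlc exports C functions with the cdecl calling convention.
    [DllImport("libvlc", CallingConvention = CallingConvention.Cdecl)]
    public static extern IntPtr libvlc_new(int argc,
        [MarshalAs(UnmanagedType.LPArray, ArraySubType = UnmanagedType.LPStr)] string[] argv);

    [DllImport("libvlc", CallingConvention = CallingConvention.Cdecl)]
    public static extern void libvlc_release(IntPtr instance);

    // Creates an engine instance; argc is always derived from the array length
    // so the two arguments can never disagree.
    public static IntPtr CreateInstance(params string[] options)
    {
        if (options == null) throw new ArgumentNullException(nameof(options));

        IntPtr instance = libvlc_new(options.Length, options);
        if (instance == IntPtr.Zero)
            throw new InvalidOperationException(
                "libvlc_new failed; check that libvlc and its plugins can be found.");
        return instance;
    }
}
```

A typical call would be `CreateInstance("--no-video-title-show", "--disable-screensaver")` followed by `libvlc_release` in a `finally` block; these option names are examples only, so check `vlc --help` for the options your VLC version actually supports.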

Below is the libvlc_log_message_t structure, taken from the libvlc_structures.h header file:

```c
typedef struct libvlc_log_message_t
{
    unsigned    sizeof_msg;   /* sizeof() of message structure, must be filled in by user */
    int         i_severity;   /* 0=INFO, 1=ERR, 2=WARN, 3=DBG */
    const char *psz_type;     /* module type */
    const char *psz_name;     /* module name */
    const char *psz_header;   /* optional header */
    const char *psz_message;  /* message */
} libvlc_log_message_t;
```

The managed analog of this structure is pretty straightforward:

```csharp
[StructLayout(LayoutKind.Sequential)]
public struct libvlc_log_message_t
{
    public UInt32 sizeof_msg;
    public Int32 i_severity;
    public IntPtr psz_type;
    public IntPtr psz_name;
    public IntPtr psz_header;
    public IntPtr psz_message;
}
```

LayoutKind.Sequential means that the members of the structure are laid out in native memory in the order in which they are declared, with the usual alignment padding.
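To see what Sequential actually produces, Marshal.OffsetOf and Marshal.SizeOf report the marshaled layout. The two 32-bit fields occupy bytes 0 to 7 and the four pointers follow, so the structure is 24 bytes in a 32-bit process and 40 bytes in a 64-bit one, which is the value sizeof_msg must hold. The LayoutCheck class below is a hypothetical diagnostic, not part of nVLC.

```csharp
using System;
using System.Runtime.InteropServices;

// Managed mirror of libvlc_log_message_t, as declared above.
[StructLayout(LayoutKind.Sequential)]
public struct libvlc_log_message_t
{
    public UInt32 sizeof_msg;
    public Int32 i_severity;
    public IntPtr psz_type;
    public IntPtr psz_name;
    public IntPtr psz_header;
    public IntPtr psz_message;
}

public static class LayoutCheck
{
    // Prints the marshaled offset of every field and the total size that
    // must be stored in sizeof_msg.
    public static void Dump()
    {
        string[] fields = { "sizeof_msg", "i_severity", "psz_type", "psz_name", "psz_header", "psz_message" };
        foreach (string field in fields)
        {
            IntPtr offset = Marshal.OffsetOf(typeof(libvlc_log_message_t), field);
            Console.WriteLine("{0,-12} offset {1}", field, offset);
        }
        Console.WriteLine("size {0}", Marshal.SizeOf(typeof(libvlc_log_message_t)));
    }
}
```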

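A union is similar to a structure, but all of its members start at the same memory address. This means the layout must be controlled explicitly for the runtime marshaler, and the FieldOffset attribute is used for this purpose.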

Here is the libvlc_event_t definition from libvlc_events.h:

```c
typedef struct libvlc_event_t
{
    int   type;
    void *p_obj;
    union
    {
        /* media descriptor */
        struct
        {
            libvlc_meta_t meta_type;
        } media_meta_changed;
        struct
        {
            libvlc_media_t * new_child;
        } media_subitem_added;
        struct
        {
            int64_t new_duration;
        } media_duration_changed;
        …
    } u;
} libvlc_event_t;
```

It is basically a structure with two simple members and a union. LayoutKind.Explicit is used to tell the runtime the exact location in memory of each field:

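```csharp
// Offsets 4 and 8 match a 32-bit process, where int and IntPtr are both 4 bytes.
[StructLayout(LayoutKind.Explicit)]
public struct libvlc_event_t
{
    [FieldOffset(0)]
    public libvlc_event_e type;

    [FieldOffset(4)]
    public IntPtr p_obj;

    // Start of the union u: every union member goes at this offset.
    [FieldOffset(8)]
    public media_player_time_changed media_player_time_changed;
}

[StructLayout(LayoutKind.Sequential)]
public struct media_player_time_changed
{
    public long new_time; // libvlc_time_t, in milliseconds
}
```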

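If you intend to extend the libvlc_event_t definition with additional union members, they must all be decorated with the [FieldOffset(8)] attribute, since all of them begin at an offset of 8 bytes. This holds for a 32-bit process; in a 64-bit process p_obj is 8 bytes wide and 8-byte aligned, so it sits at offset 8 and the union starts at offset 16.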
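One way to avoid hard-coded offsets is to let the marshaler place the fixed header with Sequential layout and use Explicit layout only inside a nested struct that represents the union. The sketch below is an alternative to the author's definition, not nVLC's code; the type and field names are hypothetical, and only three payloads from the C header are mirrored. Which union member is valid still depends on the value of type.

```csharp
using System;
using System.Runtime.InteropServices;

// Fixed header: Sequential lets the marshaler apply the platform's alignment,
// so p_obj lands at offset 4 (32-bit) or 8 (64-bit) automatically.
[StructLayout(LayoutKind.Sequential)]
public struct libvlc_event_t_portable
{
    public int type;          // libvlc_event_e value
    public IntPtr p_obj;      // object that emitted the event
    public libvlc_event_u u;  // mirrors the C union named u
}

// The union itself: every member starts at offset 0 of the union.
[StructLayout(LayoutKind.Explicit)]
public struct libvlc_event_u
{
    [FieldOffset(0)] public long media_player_time_changed_new_time;  // libvlc_time_t (ms)
    [FieldOffset(0)] public IntPtr media_subitem_added_new_child;     // libvlc_media_t *
    [FieldOffset(0)] public long media_duration_changed_new_duration; // int64_t
}
```

In both 32-bit and 64-bit processes the union then starts at twice the pointer size (8 or 16 bytes), matching the C compiler's layout without any manual offset bookkeeping.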

When the underlying VLC engine changes its internal state, it uses callback functions to notify whoever subscribed to that kind of change. Subscriptions are made with the libvlc_event_attach API defined in libvlc.h. The API has four parameters: the event manager of the object to observe, the event type, the callback function pointer, and a user data pointer that is passed back to the callback.

The callback function pointer is declared in libvlc.h as follows:

```c
typedef void ( *libvlc_callback_t )( const struct libvlc_event_t *, void * );
```

It accepts a pointer to the libvlc_event_t structure and optional user-defined data.

The managed port is a delegate with the same signature:

```csharp
[UnmanagedFunctionPointer(CallingConvention.Cdecl)]
private delegate void VlcEventHandlerDelegate(ref libvlc_event_t libvlc_event, IntPtr userData);
```

Please note that I take the libvlc_event_t structure by reference so that I can access its fields in the MediaPlayerEventOccured function. Everywhere else I simply use an IntPtr to pass the pointer between method calls.

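```csharp
public EventBroker(IntPtr hMediaPlayer)
{
    VlcEventHandlerDelegate callback1 = MediaPlayerEventOccured;
    m_hEventMngr = LibVlcMethods.libvlc_media_player_event_manager(hMediaPlayer);
    hCallback1 = Marshal.GetFunctionPointerForDelegate(callback1);

    // The m_callbacks list keeps the delegate reachable for the broker's lifetime;
    // GC.KeepAlive only protects it up to this point in the constructor.
    m_callbacks.Add(callback1);
    GC.KeepAlive(callback1);

    // The subscription itself is not shown in this excerpt. Assuming LibVlcMethods
    // declares libvlc_event_attach(IntPtr, libvlc_event_e, IntPtr, IntPtr), it would be:
    // LibVlcMethods.libvlc_event_attach(m_hEventMngr,
    //     libvlc_event_e.libvlc_MediaPlayerTimeChanged, hCallback1, IntPtr.Zero);
}

private void MediaPlayerEventOccured(ref libvlc_event_t libvlc_event, IntPtr userData)
{
    switch (libvlc_event.type)
    {
        case libvlc_event_e.libvlc_MediaPlayerTimeChanged:
            RaiseTimeChanged(libvlc_event.media_player_time_changed.new_time);
            break;

        case libvlc_event_e.libvlc_MediaPlayerEndReached:
            RaiseMediaEnded();
            break;
    }
}
```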

.NET delegate types are the managed counterpart of C callback functions, so the System.Runtime.InteropServices.Marshal class contains routines to convert delegates to and from native function pointers. After the delegate is marshaled to a function pointer that native code can call, we have to keep a reference to the managed delegate to prevent the GC from collecting it, since native pointers cannot "hold" a reference to a managed resource.
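The lifetime rule above can be reduced to a small pattern: store the delegate in a field of an object that lives at least as long as native code may call the pointer, and hand only the function pointer to native code. This is a minimal sketch with hypothetical names (NativeEventCallback, CallbackHolder); the pointer is invoked from managed code here only to show that it still works after a collection.

```csharp
using System;
using System.Runtime.InteropServices;

[UnmanagedFunctionPointer(CallingConvention.Cdecl)]
public delegate void NativeEventCallback(IntPtr libvlcEvent, IntPtr userData);

// Owns both the delegate and the native pointer created from it.
public sealed class CallbackHolder
{
    // Strong reference held by a field: the GC cannot collect the delegate
    // (and invalidate FunctionPointer) while this holder is reachable.
    private readonly NativeEventCallback m_callback;

    public IntPtr FunctionPointer { get; }
    public int CallCount { get; private set; }

    public CallbackHolder()
    {
        m_callback = OnEvent;
        FunctionPointer = Marshal.GetFunctionPointerForDelegate(m_callback);
    }

    private void OnEvent(IntPtr libvlcEvent, IntPtr userData)
    {
        CallCount++;
    }
}
```

In nVLC the equivalent holder is the EventBroker with its m_callbacks list; the broker must therefore stay alive until the callbacks are detached from libvlc.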

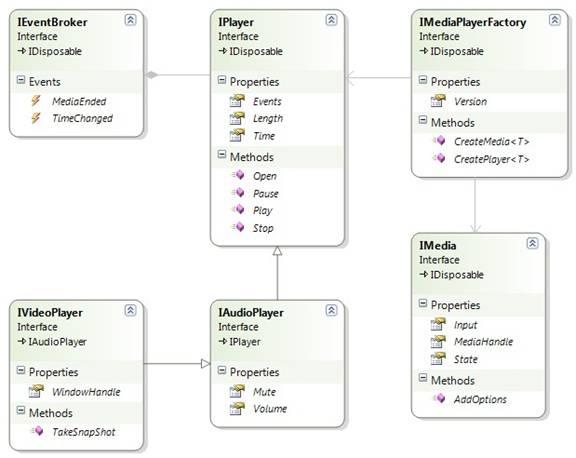

IMediaPlayerFactory - wraps the libvlc_instance_t handle and is used to create media objects and media player objects.

The implementation of these interfaces is shown below:

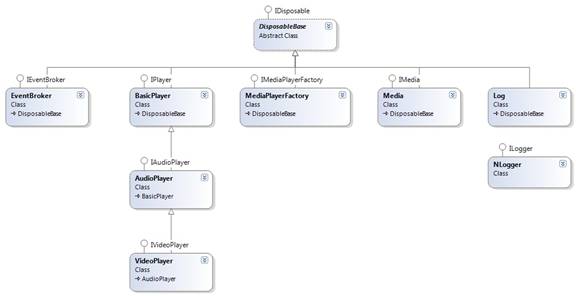

Since each wrapper object holds a reference to native memory, we have to make sure this memory is released when the managed object is reclaimed by the garbage collector. This is done either by user code calling the Dispose method (explicitly, or implicitly through a using statement) or by the finalizer when the object is collected. I wrapped this functionality in the DisposableBase class:

```csharp
using System;

public abstract class DisposableBase : IDisposable
{
    private bool m_isDisposed;

    public void Dispose()
    {
        if (!m_isDisposed)
        {
            Dispose(true);
            GC.SuppressFinalize(this);
            m_isDisposed = true;
        }
    }

    // Derived classes implement this following the pattern:
    //
    // if (disposing)
    // {
    //     // get rid of managed resources
    // }
    // // get rid of unmanaged resources
    protected abstract void Dispose(bool disposing);

    ~DisposableBase()
    {
        if (!m_isDisposed)
        {
            Dispose(false);
            m_isDisposed = true;
        }
    }

    protected void VerifyObjectNotDisposed()
    {
        if (m_isDisposed)
        {
            throw new ObjectDisposedException(this.GetType().Name);
        }
    }
}
```

Each class that inherits from DisposableBase must implement the Dispose(bool) method. It is called with true when invoked from user code, in which case both managed and unmanaged resources may be released, or with false when invoked by the finalizer, in which case only native resources may be released.
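As an illustration of a derived class, here is a sketch of a wrapper that owns a single native handle. It includes a condensed copy of DisposableBase so that it compiles on its own. NativeHandleOwner is hypothetical, and the release delegate stands in for a real libvlc release call such as libvlc_media_release; a production wrapper would call a static P/Invoke method directly.

```csharp
using System;

// Condensed copy of DisposableBase from above.
public abstract class DisposableBase : IDisposable
{
    private bool m_isDisposed;

    public void Dispose()
    {
        if (!m_isDisposed)
        {
            Dispose(true);
            GC.SuppressFinalize(this);
            m_isDisposed = true;
        }
    }

    protected abstract void Dispose(bool disposing);

    ~DisposableBase()
    {
        if (!m_isDisposed)
        {
            Dispose(false);
            m_isDisposed = true;
        }
    }

    protected void VerifyObjectNotDisposed()
    {
        if (m_isDisposed) throw new ObjectDisposedException(GetType().Name);
    }
}

// Hypothetical wrapper around one native handle.
public sealed class NativeHandleOwner : DisposableBase
{
    private IntPtr m_handle;
    private readonly Action<IntPtr> m_release;

    public NativeHandleOwner(IntPtr handle, Action<IntPtr> release)
    {
        m_handle = handle;
        m_release = release;
    }

    public IntPtr Handle
    {
        get { VerifyObjectNotDisposed(); return m_handle; }
    }

    protected override void Dispose(bool disposing)
    {
        if (disposing)
        {
            // Managed resources (event subscriptions, child wrappers) would go here.
        }

        // The native handle is released on both the Dispose and the finalizer path.
        if (m_handle != IntPtr.Zero)
        {
            m_release(m_handle);
            m_handle = IntPtr.Zero;
        }
    }
}
```

Calling Dispose twice releases the handle only once, and any access through Handle afterwards throws ObjectDisposedException.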

VLC implements its logging logic in the form of a log iterator, so I decided to implement it with the Iterator pattern as well, using a yield return statement:

```csharp
public IEnumerator<LogMessage> GetEnumerator()
{
    IntPtr i = LibVlcMethods.libvlc_log_get_iterator(m_hLog);
    try
    {
        while (LibVlcMethods.libvlc_log_iterator_has_next(i) != 0)
        {
            libvlc_log_message_t msg = new libvlc_log_message_t();
            // libvlc requires sizeof_msg to be filled in before the call.
            msg.sizeof_msg = (uint)Marshal.SizeOf(msg);
            LibVlcMethods.libvlc_log_iterator_next(i, ref msg);
            yield return GetMessage(msg);
        }
    }
    finally
    {
        // Runs even if the caller stops enumerating early (break or exception).
        LibVlcMethods.libvlc_log_iterator_free(i);
    }

    LibVlcMethods.libvlc_log_clear(m_hLog);
}

private LogMessage GetMessage(libvlc_log_message_t msg)
{
    // PtrToStringAnsi returns null for a NULL pointer (e.g. no optional header),
    // which AppendFormat renders as an empty string.
    StringBuilder sb = new StringBuilder();
    sb.AppendFormat("{0} ", Marshal.PtrToStringAnsi(msg.psz_header));
    sb.AppendFormat("{0} ", Marshal.PtrToStringAnsi(msg.psz_message));
    sb.AppendFormat("{0} ", Marshal.PtrToStringAnsi(msg.psz_name));
    sb.Append(Marshal.PtrToStringAnsi(msg.psz_type));

    return new LogMessage()
    {
        Message = sb.ToString(),
        Severity = (libvlc_log_messate_t_severity)msg.i_severity
    };
}
```

This code runs on every timer tick (every second by default), iterates over all existing log messages, and then clears the log. The actual writing to the log file (or any other target) is implemented with NLog, and you should add a custom configuration section to your app.config for this to work:

```xml
<configSections>
  <section name="nlog" type="NLog.Config.ConfigSectionHandler, NLog" />
</configSections>

<nlog xmlns="http://www.nlog-project.org/schemas/NLog.xsd"
      xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
  <targets>
    <target name="file" xsi:type="File"
            layout="${longdate} ${level} ${message}"
            fileName="${basedir}/logs/logfile.txt"
            keepFileOpen="false"
            encoding="iso-8859-2" />
  </targets>
  <rules>
    <logger name="*" minlevel="Debug" writeTo="file" />
  </rules>
</nlog>
```
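Note that libvlc's i_severity values are not ordered by importance (0=INFO, 1=ERR, 2=WARN, 3=DBG), so a cast or a numeric comparison does not map them onto NLog levels. A hedged sketch of an explicit mapping follows; VlcLogLevels is hypothetical, and it returns NLog level names so that the sketch does not depend on the NLog assembly.

```csharp
using System;

public static class VlcLogLevels
{
    // Maps libvlc_log_message_t.i_severity (0=INFO, 1=ERR, 2=WARN, 3=DBG)
    // to an NLog level name. Unknown values fall back to Trace so no
    // message is silently dropped.
    public static string ToNLogLevelName(int severity)
    {
        switch (severity)
        {
            case 0: return "Info";
            case 1: return "Error";
            case 2: return "Warn";
            case 3: return "Debug";
            default: return "Trace";
        }
    }
}
```

With NLog referenced, the name can be turned into a level with `LogLevel.FromString(...)` and passed to `logger.Log(level, message)`. With the `minlevel="Debug"` rule above, Info, Error, Warn, and Debug messages all reach the file target.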

Before running any application that uses nVLC, you have to download the latest VLC 1.1.x, or a higher version, from here. After running the installer, go to C:\Program Files\VideoLAN\VLC and copy the following items to your executable's directory: libvlc.dll, libvlccore.dll, and the plugins folder.

If any of these is missing at runtime, a DllNotFoundException will be thrown.

In your code, add a reference to the Declarations and Implementation projects. The first instance you have to construct is the MediaPlayerFactory, from which you can create media objects by calling the CreateMedia function and media player objects by calling the CreatePlayer function.


The most basic usage is playing a file into a specified panel:

```csharp
IMediaPlayerFactory factory = new MediaPlayerFactory();
IMedia media = factory.CreateMedia<IMedia>(@"C:\Videos\Movie.wmv");
IVideoPlayer player = factory.CreatePlayer<IVideoPlayer>();

player.WindowHandle = panel1.Handle;
player.Open(media);
player.Events.MediaEnded += new EventHandler(Events_MediaEnded);
player.Events.TimeChanged += new EventHandler<TimeChangedEventArgs>(Events_TimeChanged);
player.Play();
```
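A common use of the TimeChanged event is to show the playback position. libvlc reports media time in milliseconds, so a handler typically converts that value for display. The article does not show which TimeChangedEventArgs property carries the new time, so the sketch below takes a plain millisecond value; PlaybackTime is a hypothetical helper.

```csharp
using System;

public static class PlaybackTime
{
    // Formats a libvlc time value (milliseconds) as hh:mm:ss.
    public static string Format(long milliseconds)
    {
        // libvlc uses -1 when the time is unknown (e.g. no media loaded).
        if (milliseconds < 0) milliseconds = 0;

        TimeSpan t = TimeSpan.FromMilliseconds(milliseconds);
        return string.Format("{0:00}:{1:00}:{2:00}", (int)t.TotalHours, t.Minutes, t.Seconds);
    }
}
```

Because the event is raised from a libvlc thread, a WinForms handler would also need to marshal the UI update with Control.BeginInvoke before assigning the formatted text to a label.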

VLC has built-in support for DirectShow capture source filters, which means that if you have a web cam or video acquisition card with a DirectShow filter, it can be used seamlessly through the libvlc API:

```csharp
IMedia media = factory.CreateMedia<IMedia>(@"dshow://", @"dshow-vdev=Trust Webcam 15007");
```

Note that the media path is always set to dshow://, and the actual video device is specified by the option parameter.

VLC supports a wide range of network protocols such as UDP, RTP, HTTP, and others. By specifying a media path with a protocol name, IP address, and port, you can capture the stream and render it the same way as a local media file:

```csharp
IMedia media = factory.CreateMedia<IMedia>(@"udp://@172.16.10.1:19005");
```
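In a VLC UDP MRL, the part after the @ is the local (bind) side: udp://@:1234 listens on all interfaces, while udp://@239.0.0.1:1234 joins a multicast group. When the address and port come from configuration, a small builder keeps the syntax in one place. UdpMrl is a hypothetical helper, not part of nVLC.

```csharp
using System;
using System.Globalization;

public static class UdpMrl
{
    // Builds "udp://@<bindAddress>:<port>". A null or empty address
    // yields "udp://@:<port>", i.e. listen on all local interfaces.
    public static string Listen(string bindAddress, int port)
    {
        if (port <= 0 || port > 65535)
            throw new ArgumentOutOfRangeException(nameof(port));

        return string.Format(CultureInfo.InvariantCulture,
            "udp://@{0}:{1}", bindAddress ?? string.Empty, port);
    }
}
```

For example, `factory.CreateMedia<IMedia>(UdpMrl.Listen("172.16.10.1", 19005))` produces the same media path as the line above.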

Beyond its impressive playback capabilities, VLC is also an equally impressive streaming engine. Before we jump into the implementation details, I will briefly describe the streaming capabilities of the VLC media player.

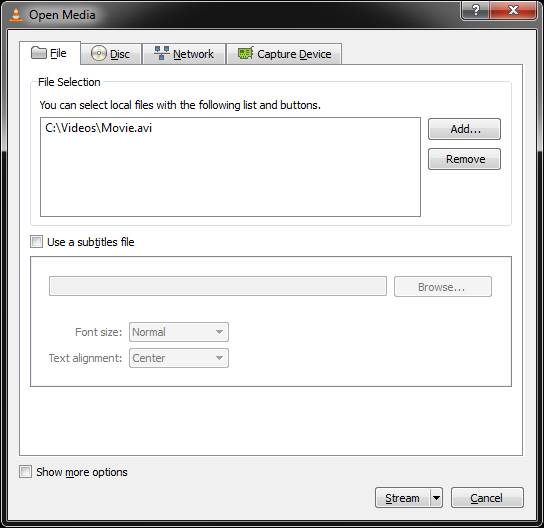

After starting VLC, go to Media -> Streaming. In the "Open Media" dialog that opens, specify the media you want to broadcast over the network:

As shown above, you can stream a local file, a disc, a network stream, or a capture device. In this case, I chose a local file, pressed "Stream", and on the next tab pressed "Next":

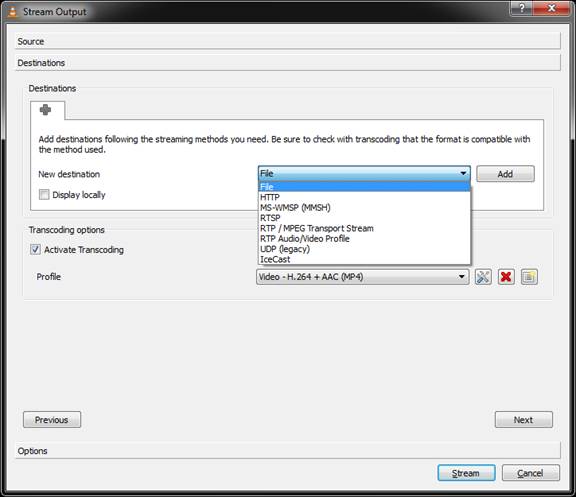

Now you can choose the destination of the previously selected stream. If the "File" option is selected and "Activate Transcoding" is checked, you are simply transcoding (or remultiplexing) the media to a different format. For the sake of simplicity, I chose UDP, pressed "Add", and then specified 127.0.0.1:9000, which means I want to stream the media locally on my machine to port 9000.

Make sure "Activate Transcoding" is checked, and press the "Edit Profile" button:

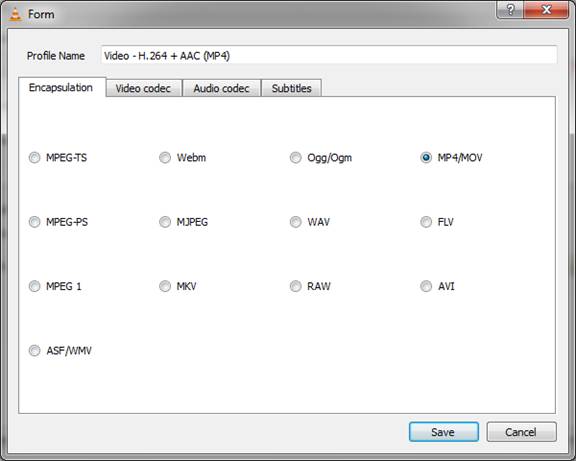

This dialog lets you choose the encapsulation (the media container format), a video codec, and an audio codec. The number of possible combinations is huge, and not every video and audio format is compatible with every container. Again, for the sake of simplicity, I chose the MP4 container with an H.264 video encoder and an AAC audio encoder. After pressing "Next", you will reach the final dialog with the "Generated stream output string".

This is the most important part, because this string has to be passed to the media object. You can simply copy it and use it with the API as follows:

```csharp
// The "Generated stream output string" from the wizard, passed as a media option.
string output =
    @":sout=#transcode{vcodec=h264,vb=0,scale=0,acodec=mp4a," +
    @"ab=128,channels=2,samplerate=44100}:udp{dst=127.0.0.1:9000} ";

IMedia media = factory.CreateMedia<IMedia>(@"C:\Videos\Movie.wmv", output);
IPlayer player = factory.CreatePlayer<IPlayer>();
player.Open(media);
player.Play();
```

This will open the selected movie file, transcode it to the desired format, and stream it over UDP.
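If the codec, bitrates, or destination come from user settings, it can help to assemble the :sout option from its parts rather than edit the pasted string. The sketch below is a hypothetical helper that reproduces exactly the string generated above (without the trailing space); it only covers this one transcode-to-UDP chain, in which modules after the leading # are separated by a colon.

```csharp
using System;
using System.Globalization;

public static class SoutBuilder
{
    // Builds ":sout=#transcode{...}:udp{dst=...}" for a single transcode step
    // followed by UDP output. scale=0 is kept as in the wizard's output.
    public static string TranscodeToUdp(string vcodec, int videoBitrate, string acodec,
        int audioBitrate, int channels, int sampleRate, string destination)
    {
        string transcode = string.Format(CultureInfo.InvariantCulture,
            "transcode{{vcodec={0},vb={1},scale=0,acodec={2},ab={3},channels={4},samplerate={5}}}",
            vcodec, videoBitrate, acodec, audioBitrate, channels, sampleRate);

        return ":sout=#" + transcode + ":udp{dst=" + destination + "}";
    }
}
```

Usage: `factory.CreateMedia<IMedia>(@"C:\Videos\Movie.wmv", SoutBuilder.TranscodeToUdp("h264", 0, "mp4a", 128, 2, 44100, "127.0.0.1:9000"))`.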

Normally you render video to the screen by passing a window handle, and frames are displayed in that window according to the media clock. libVLC also allows you to render raw video (pixel data) into a pre-allocated memory buffer. This functionality is implemented by the libvlc_video_set_callbacks and libvlc_video_set_format APIs. IVideoPlayer has a property called CustomRenderer of type IMemoryRenderer, which wraps these two APIs:

```csharp
/// <summary>
/// Enables custom processing of video frames.
/// </summary>
public interface IMemoryRenderer
{
    /// <summary>
    /// Sets the callback which is invoked when a new frame should be displayed
    /// </summary>
    /// <param name="callback">Callback method</param>
    /// <remarks>The frame will be auto-disposed after callback invocation.</remarks>
    void SetCallback(NewFrameEventHandler callback);

    /// <summary>
    /// Gets the latest video frame that was displayed.
    /// </summary>
    Bitmap CurrentFrame { get; }

    /// <summary>
    /// Sets the bitmap format for the callback.
    /// </summary>
    /// <param name="format">Bitmap format of the video frame</param>
    void SetFormat(BitmapFormat format);

    /// <summary>
    /// Gets the actual frame rate of the rendering.
    /// </summary>
    int ActualFrameRate { get; }
}
```

You have two options for frame processing:

Callback

By calling the SetCallback method, your callback will be invoked when a new frame is ready to be displayed. The System.Drawing.Bitmap object passed to the callback method is valid only inside the callback; afterwards it is disposed, so you have to clone it if you plan to use it elsewhere. Also note that the callback code must be extremely efficient; otherwise, playback will be delayed and frames may be dropped. For instance, if you are rendering a 30 frames per second video, you have a time slot of approximately 33 ms between frames. You can test for performance degradation by comparing the values of IVideoPlayer.FPS and IMemoryRenderer.ActualFrameRate. The following code snippet demonstrates rendering of 4CIF frames in RGB24 format:

```csharp
IMediaPlayerFactory factory = new MediaPlayerFactory();
IVideoPlayer player = factory.CreatePlayer<IVideoPlayer>();
IMedia media = factory.CreateMedia<IMedia>(@"C:\MyVideoFile.avi");

IMemoryRenderer memRender = player.CustomRenderer;
memRender.SetCallback(delegate(Bitmap frame)
{
    // Do something with the bitmap; clone it if it must outlive the callback.
});

// 4CIF (704x576) frames in RGB24
memRender.SetFormat(new BitmapFormat(704, 576, ChromaType.RV24));

player.Open(media);
player.Play();
```

Get frame

If you want to query for frames at your own pace, use the CurrentFrame property. It returns the latest frame that was scheduled for display. It is your responsibility to free its resources when you are done with it.

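```csharp
IMediaPlayerFactory factory = new MediaPlayerFactory();
IVideoPlayer player = factory.CreatePlayer<IVideoPlayer>();
IMedia media = factory.CreateMedia<IMedia>(@"C:\MyVideoFile.avi");

IMemoryRenderer memRender = player.CustomRenderer;
memRender.SetFormat(new BitmapFormat(704, 576, ChromaType.RV24));

player.Open(media);
player.Play();

// Called periodically, e.g. from a timer.
private void OnTimer(IMemoryRenderer memRender)
{
    // The caller owns the returned bitmap; using disposes it even if processing throws.
    // The null check is defensive, in case no frame is available yet right after Play().
    using (Bitmap bmp = memRender.CurrentFrame)
    {
        if (bmp == null)
            return;

        // Do something with the bitmap
    }
}
```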

The SetFormat method accepts a BitmapFormat object that encapsulates the frame size and pixel format. Bytes per pixel, frame size, and pitch (or stride) are calculated internally according to the ChromaType value.
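The arithmetic behind those internal calculations is easy to check by hand. The sketch below shows it for packed RGB formats (RV24 = 3 bytes per pixel, RV32 = 4) and for planar I420. The 4-byte rounding of the stride follows the GDI+ requirement that Bitmap strides be multiples of four; whether nVLC pads the pitch in exactly this way is an assumption, and FrameGeometry is a hypothetical helper.

```csharp
using System;

public static class FrameGeometry
{
    // Bytes per row for packed formats, rounded up to a multiple of 4.
    public static int PackedStride(int width, int bytesPerPixel)
    {
        return (width * bytesPerPixel + 3) & ~3;
    }

    public static int PackedFrameSize(int width, int height, int bytesPerPixel)
    {
        return PackedStride(width, bytesPerPixel) * height;
    }

    // Planar I420: a full-resolution Y plane plus U and V planes
    // at half width and half height each.
    public static int I420FrameSize(int width, int height)
    {
        int chromaWidth = (width + 1) / 2;
        int chromaHeight = (height + 1) / 2;
        return width * height + 2 * chromaWidth * chromaHeight;
    }
}
```

For the 4CIF example above, an RV24 frame has a 2,112-byte stride and occupies 1,216,512 bytes, while the same frame in I420 needs only 608,256 bytes.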

IVideoPlayer can operate either in on-screen rendering mode or in memory rendering mode. Once you switch it to memory rendering mode by accessing the CustomRenderer property, you will not see any video on screen.

Starting with libVLC 1.2.0, the VLC engine can output decoded audio and video data for custom processing; in other words, you feed in any encoded and multiplexed media and get decoded video frames and audio samples as output. The format of the audio and video samples can be set before playback starts, as well as the video size, pixel alignment, audio format, number of channels, and more. When playback starts, the appropriate callback function is invoked for each video frame at its display time, and for a given number of audio samples at their playback time. This gives you, as a developer, great flexibility, since you can apply different image and sound processing algorithms and, if needed, eventually render the audiovisual data yourself.

libVLC exposes this advanced functionality through the libvlc_video_set_*** and libvlc_audio_set_*** families of APIs. In the nVLC project, video functionality is exposed through the IMemoryRendererEx interface:

```csharp
/// <summary>
/// Contains methods for setting custom processing of video frames.
/// </summary>
public interface IMemoryRendererEx
{
    /// <summary>
    /// Sets the callback which is invoked when a new frame should be displayed
    /// </summary>
    /// <param name="callback">Callback method</param>
    void SetCallback(NewFrameDataEventHandler callback);

    /// <summary>
    /// Gets the latest video frame that was displayed.
    /// </summary>
    PlanarFrame CurrentFrame { get; }

    /// <summary>
    /// Sets the callback invoked before the media playback starts
    /// to set the desired frame format.
    /// </summary>
    /// <param name="setupCallback"></param>
    /// <remarks>If not set, original media format will be used</remarks>
    void SetFormatSetupCallback(Func<BitmapFormat, BitmapFormat> setupCallback);

    /// <summary>
    /// Gets the actual frame rate of the rendering.
    /// </summary>
    int ActualFrameRate { get; }
}
```

and audio samples can be accessed through the CustomAudioRenderer property of the IAudioPlayer object:

```csharp
/// <summary>
/// Enables custom processing of audio samples
/// </summary>
public interface IAudioRenderer
{
    /// <summary>
    /// Sets callback methods for volume change and audio samples playback
    /// </summary>
    /// <param name="volume">Callback method invoked
    /// when volume changed or muted</param>
    /// <param name="sound">Callback method invoked when
    /// new audio samples should be played</param>
    void SetCallbacks(VolumeChangedEventHandler volume, NewSoundEventHandler sound);

    /// <summary>
    /// Sets audio format
    /// </summary>
    /// <param name="format"></param>
    /// <remarks>Mutually exclusive with SetFormatCallback</remarks>
    void SetFormat(SoundFormat format);

    /// <summary>
    /// Sets audio format callback, to get/set format before playback starts
    /// </summary>
    /// <param name="formatSetup"></param>
    /// <remarks>Mutually exclusive with SetFormat</remarks>
    void SetFormatCallback(Func<SoundFormat, SoundFormat> formatSetup);
}
```
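When handling the sound callback, you usually need to know how many bytes a block of samples occupies and how much playback time it represents. The SoundFormat fields used by nVLC are not shown in this article, so the sketch below takes plain parameters and assumes interleaved PCM, where the sample count is per channel (one sample for each channel). PcmMath is a hypothetical helper.

```csharp
using System;

public static class PcmMath
{
    // Size of an interleaved PCM block: samples x channels x bytes per sample.
    public static int BufferBytes(int sampleCount, int channels, int bitsPerSample)
    {
        return sampleCount * channels * (bitsPerSample / 8);
    }

    // Playback duration of a block in milliseconds.
    public static double DurationMs(int sampleCount, int sampleRate)
    {
        return sampleCount * 1000.0 / sampleRate;
    }
}
```

For example, 1,024 stereo 16-bit samples occupy 4,096 bytes and last about 23.2 ms at 44.1 kHz, which is roughly the time budget a sound callback has before the next block is due.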

To make rendering video frames and playing audio samples easier, I developed a small library called Taygeta. It started as a test application for the nVLC features, but since I liked it so much, I decided to turn it into a standalone project. It uses Direct3D for hardware-accelerated video rendering and XAudio2 for audio playback. It also contains a sample application with all of the previously described functionality.

As described in the previous sections, VLC provides many access modules for different kinds of media. When none of them satisfies your requirements, for example when you need to capture a window's contents or stream a 3D scene to another machine, memory input will do the job, as it provides an interface for streaming media from a memory buffer. libVLC contains two modules for memory input: invmem and imem. The problem is that neither of them is exposed by the libVLC API, and one has to put in some real effort to make them work, especially from managed code.

Invmem was deprecated in libVLC 1.2 so I will not describe it here. It is exposed via IVideoInputMedia object and you can search the "Comments and Discussions" forum for usage examples.

Imem, on the other hand, is still supported and exposed by IMemoryInputMedia object:

```csharp
/// <summary>
/// Enables elementary stream (audio, video, subtitles or data) frames insertion into VLC engine
/// (based on imem access module)
/// </summary>
public interface IMemoryInputMedia : IMedia
{
    /// <summary>
    /// Initializes instance of the media object with stream information and frames' queue size
    /// </summary>
    /// <param name="streamInfo"></param>
    /// <param name="maxItemsInQueue">Maximum items in the queue. If the queue is full any AddFrame overload
    /// will block until queue slot becomes available</param>
    void Initialize(StreamInfo streamInfo, int maxItemsInQueue = 30);

    /// <summary>
    /// Add frame of elementary stream data from memory on native heap
    /// </summary>
    /// <param name="frame"></param>
    /// <remarks>This function copies frame data to internal buffer, so native memory may be safely freed</remarks>
    void AddFrame(FrameData frame);

    /// <summary>
    /// Add frame of elementary stream data from memory on managed heap
    /// </summary>
    /// <param name="data"></param>
    /// <param name="pts">Presentation time stamp</param>
    /// <param name="dts">Decoding time stamp. -1 for unknown</param>
    /// <remarks>Time origin for both pts and dts is 0</remarks>
    void AddFrame(byte[] data, long pts, long dts = -1);

    /// <summary>
    /// Add frame of video stream from System.Drawing.Bitmap object
    /// </summary>
    /// <param name="bitmap"></param>
    /// <param name="pts">Presentation time stamp</param>
    /// <param name="dts">Decoding time stamp. -1 for unknown</param>
    /// <remarks>Time origin for both pts and dts is 0.
    /// This function copies bitmap data to internal buffer, so bitmap may be safely disposed</remarks>
    void AddFrame(Bitmap bitmap, long pts, long dts = -1);

    /// <summary>
    /// Sets handler for exceptions thrown by background threads
    /// </summary>
    /// <param name="handler"></param>
    void SetExceptionHandler(Action<Exception> handler);

    /// <summary>
    /// Gets number of pending frames in queue
    /// </summary>
    int PendingFramesCount { get; }
}
```

The interface provides three AddFrame overloads, which take frame data from a pointer on the native heap, a managed byte array, or a Bitmap object. Each method copies the data into an internal structure and stores it in the frame queue, so after calling AddFrame you can release the frame's resources. Once you initialize the IMemoryInputMedia and call Play on the media player object, VLC launches a playback thread that runs an infinite loop. Inside the loop it fetches a frame of data and pushes it to the downstream modules as quickly as possible.

To support this paradigm, I created a producer/consumer queue to hold media frames. The queue is a BlockingCollection, which perfectly suits the needs of this module: it blocks the producer thread when the queue is full and blocks the consumer thread when the queue is empty. The default queue size is 30, so it caches approximately 1 second of video at 30 fps. This cache allows smooth video playback. Keep in mind that increasing the queue size increases memory usage: one frame of HD video (1920x1080) in BGR24 occupies 1920 x 1080 x 3 = 6,220,800 bytes, about 5.93 MiB. If you have control over the frame rate of your media source, you can periodically check the number of pending frames in the queue and increase or decrease the rate accordingly.
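The queue described above can be sketched with a bounded BlockingCollection. This is an illustration of the technique, not nVLC's internal code: Frame and FrameQueue are hypothetical, the producer copies the data as AddFrame does, and TryAddFrame shows the non-blocking alternative for sources that would rather drop a frame than wait. MemoryBudgetBytes gives the worst-case memory of a full queue of packed frames.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical stand-in for the wrapper's internal frame structure.
public sealed class Frame
{
    public byte[] Data;
    public long Pts;
}

public sealed class FrameQueue : IDisposable
{
    private readonly BlockingCollection<Frame> m_queue;

    public FrameQueue(int maxFrames = 30)
    {
        // Bounded FIFO: Add blocks when full, Take blocks when empty.
        m_queue = new BlockingCollection<Frame>(new ConcurrentQueue<Frame>(), maxFrames);
    }

    public int PendingFramesCount { get { return m_queue.Count; } }

    // Producer: copies the data so the caller may release its buffer at once.
    public void AddFrame(byte[] data, long pts)
    {
        m_queue.Add(new Frame { Data = (byte[])data.Clone(), Pts = pts });
    }

    // Non-blocking producer variant: returns false instead of waiting when full.
    public bool TryAddFrame(byte[] data, long pts)
    {
        return m_queue.TryAdd(new Frame { Data = (byte[])data.Clone(), Pts = pts });
    }

    // Consumer (the playback thread): blocks until a frame is available.
    public Frame Take()
    {
        return m_queue.Take();
    }

    // Worst-case memory held by a full queue of packed frames.
    public static long MemoryBudgetBytes(int maxFrames, int width, int height, int bytesPerPixel)
    {
        return (long)maxFrames * width * height * bytesPerPixel;
    }

    public void Dispose()
    {
        m_queue.Dispose();
    }
}
```

With the default size of 30, a full queue of 1920x1080 BGR24 frames holds 186,624,000 bytes (about 178 MiB), which is why the queue size is worth tuning for HD sources.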

DTS and PTS values tell the libVLC engine when the frame should be handled by the decoder (the decoding time stamp) and when it should be presented by the renderer (the presentation time stamp). The default value for DTS is -1, which means DTS is not used and only PTS is. This is useful for raw video frames such as BGR24 or I420, which go directly to rendering and need no decoding. PTS is mandatory; if your media frames don't carry it, it can easily be calculated from the FPS of your media source and a frame counter:

```csharp
long frameNumber = 0;
long frameIntervalInMicroSeconds = 1000000 / FrameRate;
long PTS = ++frameNumber * frameIntervalInMicroSeconds;
```

This gives the PTS of the rendered frame in microseconds.
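One caveat with a precomputed interval: 1000000 / 30 truncates to 33,333 µs, so after 30 frames the clock is 10 µs behind, and the error keeps growing. Multiplying before dividing avoids that drift and also handles fractional rates such as 29.97 fps (30000/1001). The sketch below uses a zero-based frame index, matching the "time origin is 0" remark in the IMemoryInputMedia documentation; PtsClock is a hypothetical helper.

```csharp
using System;

public static class PtsClock
{
    // PTS in microseconds for a zero-based frame index at
    // fps = fpsNumerator / fpsDenominator (25/1, 30000/1001, ...).
    // Multiplying first keeps the result exact for every frame
    // instead of accumulating the truncation error of a fixed interval.
    public static long Pts(long frameIndex, int fpsNumerator, int fpsDenominator = 1)
    {
        return frameIndex * 1000000L * fpsDenominator / fpsNumerator;
    }
}
```

For example, `PtsClock.Pts(30, 30)` is exactly 1,000,000 µs, whereas `30 * (1000000 / 30)` yields 999,990.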

Using the code is the same as any other media instance:

```csharp
StreamInfo fInfo = new StreamInfo();
fInfo.Category = StreamCategory.Video;
fInfo.Codec = VideoCodecs.BGR24;
fInfo.FPS = FrameRate;
fInfo.Width = Width;
fInfo.Height = Height;

IMemoryInputMedia m_iMem = m_factory.CreateMedia<IMemoryInputMedia>(MediaStrings.IMEM);
m_iMem.Initialize(fInfo);
m_player.Open(m_iMem);
m_player.Play();

// ...

private void OnYourMediaSourceCallback(MediaFrame frame)
{
    // AddFrame copies the data, so the source frame can be disposed right away.
    var fdata = new FrameData() { Data = frame.Data, DataSize = frame.DataSize, DTS = -1, PTS = frame.PTS };
    m_iMem.AddFrame(fdata);
    frame.Dispose();
}
```

When you are done, don't forget to dispose of the media object and release all pending frames.